Cross-posted with Prawfsblog

I am pleased to announce the release of the Forward-Looking Academic Impact Rankings (FLAIR) for US law schools for 2023. I began this project two years ago because of my intense frustration that my law faculty (Loyola Chicago, at the time) had yet again been left out of the Sisk rankings. The project has evolved and matured since then, and the design of the FLAIR rankings owes a great deal to debates that I have had with Prof. Gregory Sisk, partly in public, but mostly in private.

You can download the full draft paper from SSRN or wait for it to come out in the Florida State University Law Review.

How do the FLAIR rankings work?

I combined individual five-year citation data from HeinOnline with faculty lists scraped directly from almost 200 law school websites to calculate the mean and median five-year citation counts for every ABA-accredited law school. Yes, that was a lot of work. Based on faculty websites, hiring announcements, and other data sources, I excluded assistant professors and faculty who began their tenure-track careers in 2017 or later. I also limited the focus to what is traditionally considered the “doctrinal” faculty. The paper provides more details and the rationales for both of these decisions.
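The aggregation step can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the pipeline used for FLAIR: the `FacultyMember` record, its field names, and the sample data are hypothetical, and it assumes each person's HeinOnline five-year citation count has already been matched to a name on a school's faculty list. Only the two exclusions (assistant professors, and tenure-track careers starting in 2017 or later) and the doctrinal-only restriction come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, median


@dataclass
class FacultyMember:
    # Hypothetical record; field names are illustrative, not HeinOnline's schema.
    school: str
    five_year_cites: int
    title: str               # e.g. "assistant", "associate", "full"
    tenure_track_start: int  # year the tenure-track career began
    doctrinal: bool          # True if counted as "doctrinal" faculty


def is_eligible(m: FacultyMember) -> bool:
    """Apply the exclusions described in the text."""
    return (
        m.doctrinal
        and m.title != "assistant"
        and m.tenure_track_start < 2017
    )


def school_stats(members: list[FacultyMember]) -> dict[str, tuple[float, float]]:
    """Return {school: (mean, median)} of five-year citations for eligible faculty."""
    cites_by_school: dict[str, list[int]] = {}
    for m in members:
        if is_eligible(m):
            cites_by_school.setdefault(m.school, []).append(m.five_year_cites)
    return {s: (mean(c), median(c)) for s, c in cites_by_school.items()}


# Usage with made-up numbers:
sample = [
    FacultyMember("School A", 400, "full", 2005, True),
    FacultyMember("School A", 200, "associate", 2012, True),
    FacultyMember("School A", 50, "assistant", 2019, True),  # excluded: assistant
    FacultyMember("School A", 90, "associate", 2018, True),  # excluded: started 2017+
    FacultyMember("School A", 10, "full", 2000, False),      # excluded: not doctrinal
    FacultyMember("School B", 100, "full", 1999, True),
    FacultyMember("School B", 300, "full", 2010, True),
    FacultyMember("School B", 50, "associate", 2015, True),
]
stats = school_stats(sample)
print(stats)
```

Reporting both the mean and the median matters because a single very highly cited scholar can pull a faculty's mean well above its median.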

How do the FLAIR rankings compare to other law school rankings?

Among their many flaws, the U.S. News law school rankings rely on poorly designed, highly subjective surveys to gauge “reputational strength,” rather than looking to easily available, objective citation data that is more valid and reliable. Would-be usurpers of U.S. News use better data but make other arbitrary choices that limit and distort their rankings. One flaw common to U.S. News and those who would displace it is the fetishization of minor differences in placement that do not reflect actual differences in substance. In my view, this information is worse than trivial: it is actively misleading.

The FLAIR rankings use objective citation data that is more valid and reliable than the U.S. News surveys, and unlike the Sisk rankings, FLAIR gives every ABA-accredited law school a chance to have the work of its faculty considered. Obviously, it is much fairer to assess every school than to arbitrarily exclude some based on an intuition (a demonstrably faulty intuition at that) that particular schools have no chance of ranking in the top X%. Well, it’s obvious to me at least. But perhaps more importantly, looking at all the data gives us a valid context in which to assess individual data points. The FLAIR rankings are designed to convey relevant distinctions without placing undue emphasis on minor differences in rank that are substantively unimportant. This goes against the horserace mentality that drives so much interest in U.S. News, but I’m not here to sell anything.

What are the relevant distinctions?

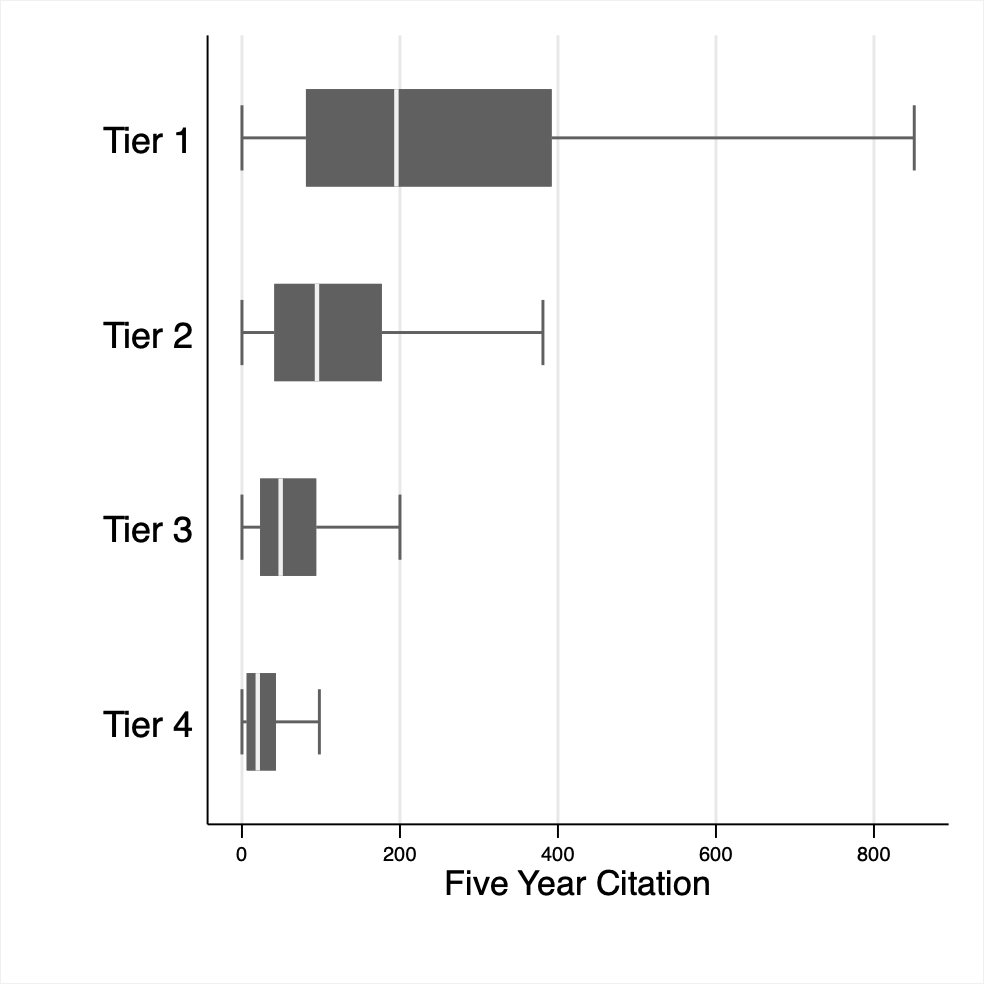

The FLAIR rankings assign law faculties to four separate tiers based on how far their mean and median five-year citation counts lie above or below the average of all faculties, measured in standard deviations of the means and medians of all faculties. Tier 1 is made up of faculties more than one standard deviation above the mean, Tier 2 of those between zero and one standard deviation above the mean, Tier 3 of those between the mean and half a standard deviation below it, and Tier 4 of all schools more than half a standard deviation below the mean. In other words, Tier 1 schools are exceptional, Tier 2 schools are above average, Tier 3 schools are below average, and Tier 4 schools are well below average.
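The tier rule amounts to a set of z-score cutoffs. The sketch below is one possible reading, not the code behind FLAIR: the text does not say exactly how the mean-based and median-based comparisons are combined, so this version assumes the two z-scores are simply averaged, uses the population standard deviation, and places a school sitting exactly on the mean in Tier 3. Each of those choices is an assumption and could move a school that sits near a cutoff.

```python
from __future__ import annotations

from statistics import mean, pstdev


def z_scores(values: dict[str, float]) -> dict[str, float]:
    """Standardize each school's value against all schools' values."""
    vals = list(values.values())
    mu, sigma = mean(vals), pstdev(vals)  # assumes sigma > 0
    return {school: (v - mu) / sigma for school, v in values.items()}


def tier(z: float) -> int:
    """Map a z-score to a tier using the cutoffs described in the text."""
    if z > 1.0:
        return 1  # more than 1 SD above the mean
    if z > 0.0:
        return 2  # between 0 and 1 SD above the mean
    if z >= -0.5:
        return 3  # between the mean and 0.5 SD below it
    return 4      # more than 0.5 SD below the mean


def assign_tiers(stats: dict[str, tuple[float, float]]) -> dict[str, int]:
    """stats maps school -> (mean cites, median cites)."""
    z_mean = z_scores({s: m for s, (m, _) in stats.items()})
    z_median = z_scores({s: md for s, (_, md) in stats.items()})
    # Assumption: combine the two comparisons by averaging the z-scores.
    return {s: tier((z_mean[s] + z_median[s]) / 2) for s in stats}


# Hypothetical faculties with made-up (mean, median) citation counts:
example = {"A": (10, 10), "B": (20, 20), "C": (30, 30), "D": (40, 40), "E": (100, 100)}
print(assign_tiers(example))
```

Because the cutoffs are expressed in standard deviations rather than rank positions, the number of schools in each tier is not fixed in advance; it depends on how the citation data are actually distributed.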

The figure below is a boxplot of the distribution of citation counts for each tier. (There is a more complete explanation in the paper, but essentially, the line in the middle of each box is the median, the box itself spans the middle 50%, and the “whiskers” at either end cover the lowest and highest 25%.) The boxplot shows substantial differences between the tiers, but it also underscores that there is nonetheless considerable overlap between them.
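For readers less familiar with boxplots, the quantities behind each box can be computed directly. This sketch uses Python's `statistics.quantiles` with the "inclusive" method; the citation counts are invented. Plotting tools differ in how they compute quartiles and where they end the whiskers. A common convention (the default in matplotlib and ggplot2) stops each whisker at the most extreme value within 1.5 times the interquartile range and draws anything beyond as a separate outlier point, so whiskers do not always reach the very lowest and highest values.

```python
from __future__ import annotations

from statistics import quantiles


def five_number_summary(cites: list[int]) -> tuple[float, ...]:
    """Minimum, first quartile, median, third quartile, maximum."""
    q1, med, q3 = quantiles(cites, n=4, method="inclusive")
    return (min(cites), q1, med, q3, max(cites))


def tukey_whiskers(cites: list[int], k: float = 1.5) -> tuple[int, int]:
    """Whisker ends under the common 1.5 x IQR convention."""
    q1, _, q3 = quantiles(cites, n=4, method="inclusive")
    iqr = q3 - q1
    low = min(c for c in cites if c >= q1 - k * iqr)
    high = max(c for c in cites if c <= q3 + k * iqr)
    return (low, high)


faculty = [10, 20, 30, 40, 500]  # invented five-year citation counts
print(five_number_summary(faculty))  # the box spans Q1 to Q3
print(tukey_whiskers(faculty))       # 500 lies beyond the upper whisker
```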

The FLAIR rankings

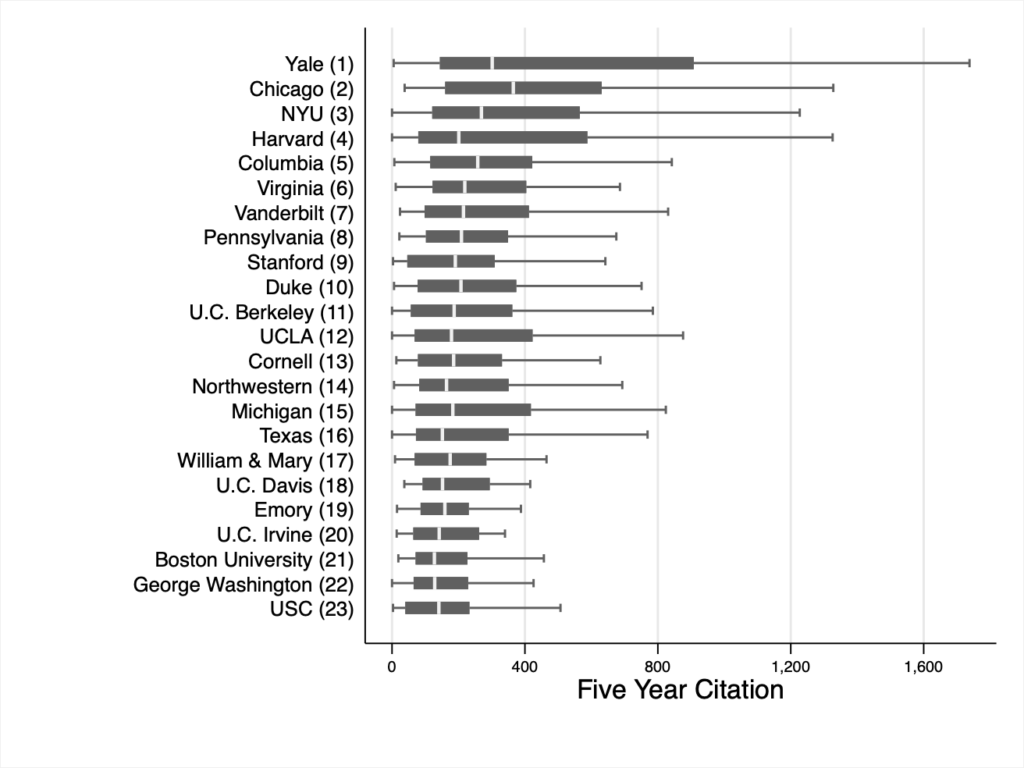

The next figure focuses on Tier 1. The FLAIR rank for each school is indicated in parentheses. The boxplot next to each school’s name shows the distribution of citation counts across that school’s doctrinal faculty members.

Readers who pay close attention to the U.S. News rankings will note that the top tier consists of 23 schools, not the much-vaunted “T14”. The T14 is a meaningless category; it does not reflect any current empirical reality or any substantial difference between the 14th and 15th rank. Attentive readers will also note that several schools well outside of the (hopefully now discredited concept of the) T14 are in the top tier: U.C. Irvine, U.C. Davis, Emory, William & Mary, and George Washington. These schools’ academic impact significantly outpaces their overall U.S. News rankings. U.C. Davis outperforms its U.S. News ranking by 42 places!

Looking at the top tier of the FLAIR rankings as visualized in the figure above also illustrates how misleading ordinal differences in ranking can be. There is very little difference between Virginia, Vanderbilt, and the University of Pennsylvania in terms of academic impact. The medians and the general distribution of each of these faculties are quite similar. Thus, we can conclude that the differences between ranks 6 and 8 are unimportant, and that it is not news if Virginia “drops” to 8th or Pennsylvania rises to 6th in the FLAIR rankings, or indeed in the U.S. News rankings.

The differences that matter, and those that don’t

In the Olympics, third place is a bronze medal, and fourth place is nothing; but there are no medals in the legal academy and there is no difference in academic impact between third and fourth that is worth talking about. Minor differences in placement rarely correspond to differences in substance. Accordingly, rather than emphasizing largely irrelevant ordinal comparisons between schools only a few places apart, what we should really focus on is which tier in the rankings a school belongs to. Moreover, even when a difference in ranking suggests that there is a genuine difference in the overall academic impact of one faculty versus another, those aggregate differences say very little about the academic impact of individual faculty members. There is a lot of variation within faculties!

Objections to quantification

Many readers will object to any attempt to quantify academic impact, or to the use of data from HeinOnline specifically. Some of these objections make sense in relation to assessing individuals, but I don’t think that any of them retain much force when applied to assessing faculties as a whole. If we are really interested in the impact of individual scholars, we need to assess a broad range of objective evidence in context; that context comes from reading their work and understanding the field as a whole. In contrast, no one could be expected to read the works of an entire faculty to get a sense of its academic influence. Indeed, citation counts, or other similarly reductive measures, are the only feasible way to make between-faculty comparisons with any degree of rigor. What is more, aggregating the data at the faculty level reduces the impact of individual distortions, much like a mutual fund reduces the volatility associated with individual stocks.

One thing I should be very clear about is that academic impact is not the same thing as quality or merit. This is important because, although I think that the data can be an important tool for overcoming bias, I also need to acknowledge that citation counts will reflect the structural inequalities that pervade the legal academy. A glance at the most common first names among law school doctrinal faculty in the United States is illustrative. In order of frequency, the 15 most common first names are Michael, David, John, Robert, Richard, James, Mark, Daniel, William, Stephen, Paul, Christopher, Thomas, Andrew, and Susan. It should be immediately apparent that this group is more male and probably a lot whiter than a random sample of the U.S. population would predict. As I said, citation counts are a measure of impact, not merit. This is not a problem with citation counts as such; qualitative assessments and reputational surveys suffer from the same problem. There is no objective way to assess what the academic impact of individuals or faculties would be in an alternative universe free from racism, sexism, and ableism. A better system of ranking the academic impact of law faculties will more accurately reflect the world we live in; that increased accuracy might help make the world better at the margins, but it won’t do much to fix underlying structural inequalities.

Corrections and updates

Several schools took the opportunity to email me with corrections or updates to their faculty lists in the past three months. If I receive other corrections that might meaningfully change the rankings, I will post a revised version.